PanGu-π: Enhancing Language Model Architectures via Nonlinearity Compensation

Introduction

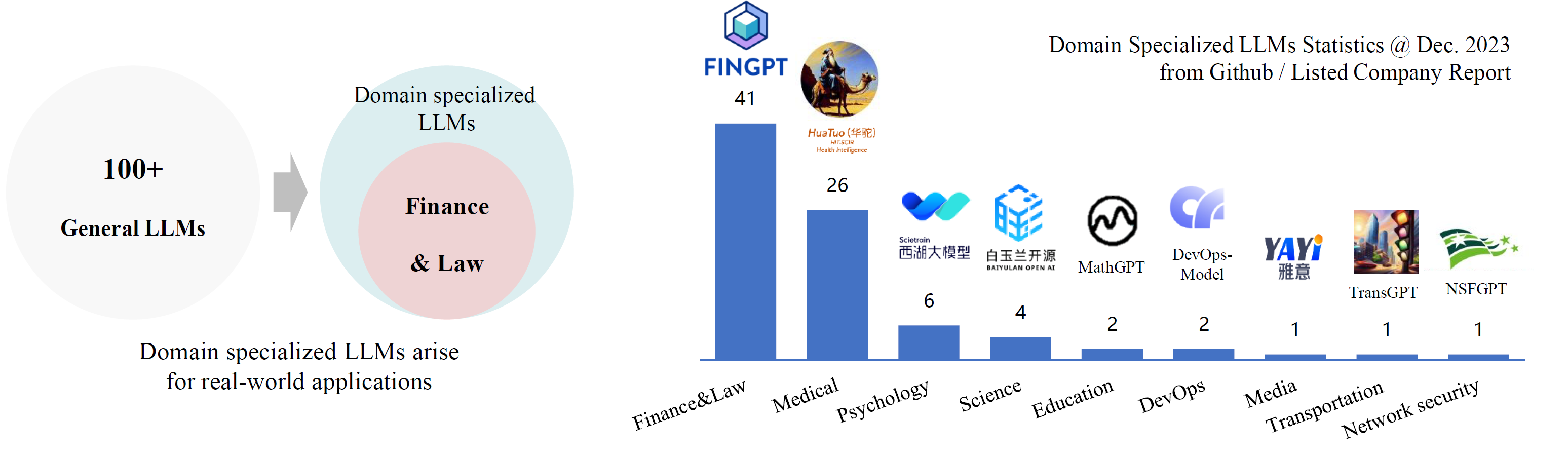

We introduce PanGu-π, a new architecture for large language models (LLMs). As LLMs keep growing in model size and training data to improve performance, improvements to the model architecture itself often receive little attention. PanGu-π addresses this gap by introducing modules that enhance the model's nonlinearity, which increases its expressive capability. PanGu-π achieves leading performance and efficiency at both the 7B and 1B model scales, demonstrating the value of architectural innovation in LLMs. Building on this architecture, the specialized YunShan model targets high-value domains such as finance and law, showing that the approach carries over to practical applications.
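This README does not specify which modules PanGu-π uses to add nonlinearity. As a rough illustration of what "enhancing nonlinearity" can mean at the level of a single activation, the sketch below assumes a "series" activation that sums several shifted and scaled copies of GELU, σ_s(x) = Σ_i a_i · σ(x + b_i). The function names, the choice of GELU as the base activation, and the parameter values are assumptions for illustration. This is not taken from the PanGu-π code.

```python
import math
from typing import Sequence


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with the Gaussian CDF computed via math.erf."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def series_activation(x: float, scales: Sequence[float], biases: Sequence[float]) -> float:
    """Hypothetical 'series' activation: sum_i scales[i] * gelu(x + biases[i]).

    Each extra (scale, bias) pair adds another shifted copy of the base
    nonlinearity, so the combined function can bend in more places than a
    single GELU. With scales=[1.0] and biases=[0.0] it reduces to plain GELU.
    """
    if len(scales) != len(biases):
        raise ValueError("scales and biases must have the same length")
    return sum(a * gelu(x + b) for a, b in zip(scales, biases))


if __name__ == "__main__":
    # Apply the (assumed) series activation elementwise to a small input vector.
    xs = [-2.0, -0.5, 0.0, 0.5, 2.0]
    ys = [series_activation(x, scales=[1.0, 0.5], biases=[0.0, -1.0]) for x in xs]
    print(ys)
```

In a real model the scales and biases would be learned per channel and the function applied to tensors rather than Python floats; the scalar version here only shows the shape of the computation.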